Surf-D: Generating High-Quality Surfaces of Arbitrary Topologies Using Diffusion Models


Abstract

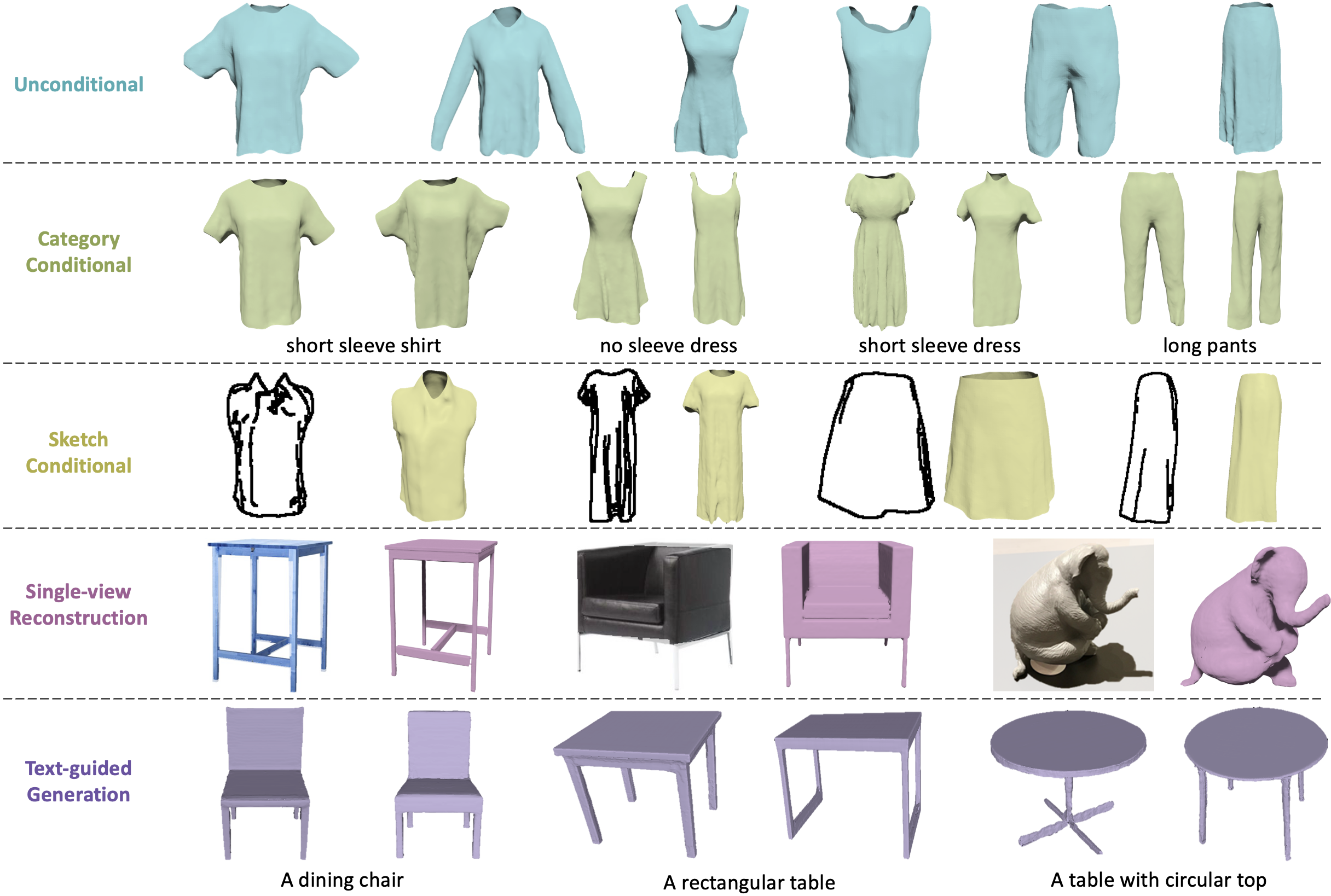

Our method achieves high-quality Surface generation with detailed geometry and various topologies using a Diffusion model. It achieves state-of-the-art (SOTA) performance on a range of shape generation tasks, including unconditional generation, category conditional generation, sketch conditional shape generation, single-view reconstruction, and text-guided shape generation.

We present Surf-D, a novel method for generating high-quality 3D shapes as Surfaces with arbitrary topologies using Diffusion models. Previous methods have explored shape generation with different representations, but they suffer from limited topologies and poor geometric detail. To generate high-quality surfaces of arbitrary topologies, we adopt the Unsigned Distance Field (UDF) as our surface representation, because it can accommodate any topology. Furthermore, we propose a new pipeline that employs a point-based AutoEncoder to learn a compact and continuous latent space that accurately encodes UDFs and supports high-resolution mesh extraction. We further show that this pipeline significantly outperforms prior approaches to learning distance fields, such as grid-based AutoEncoders, which do not scale well and cannot learn accurate UDFs. In addition, we adopt a curriculum learning strategy to efficiently embed diverse surfaces. With the pretrained shape latent space, we employ a latent diffusion model to learn the distribution of various shapes. We present extensive experiments using Surf-D for unconditional generation, category conditional generation, image conditional generation, and text-to-shape tasks. The experiments demonstrate the superior performance of Surf-D in shape generation across multiple conditioning modalities.
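For intuition on why a UDF accommodates arbitrary topologies: the unsigned distance u(x) = min over p in S of ||x - p|| is well defined for any surface S, including open, non-watertight ones such as a garment, because unlike a signed distance it needs no notion of inside and outside. The minimal sketch below assumes the surface is approximated by a finite set of sampled points and evaluates the UDF by brute force; the function name `udf` and the point-sampling approximation are illustrative assumptions, not the Surf-D implementation.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def udf(query: Point, surface_points: Sequence[Point]) -> float:
    """Approximate the unsigned distance from `query` to a surface given as sampled points.

    Brute-force nearest-neighbour search; a practical pipeline would use a spatial index.
    """
    if not surface_points:
        raise ValueError("surface_points must be non-empty")
    # The UDF is the distance to the closest surface sample: always >= 0 and
    # carrying no inside/outside sign, so open surfaces are handled naturally.
    return min(math.dist(query, p) for p in surface_points)


# An open surface: a 2x2 patch of the z = 0 plane sampled on a regular grid.
patch = [(x / 10.0, y / 10.0, 0.0) for x in range(-10, 11) for y in range(-10, 11)]

print(udf((0.0, 0.0, 0.5), patch))   # 0.5 (above the patch)
print(udf((0.0, 0.0, -0.5), patch))  # 0.5 (below the patch: same value, no sign flip)
```

A signed distance field would need to decide which side of this patch is "inside", which is undefined for an open surface; the UDF simply returns the same distance on both sides.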

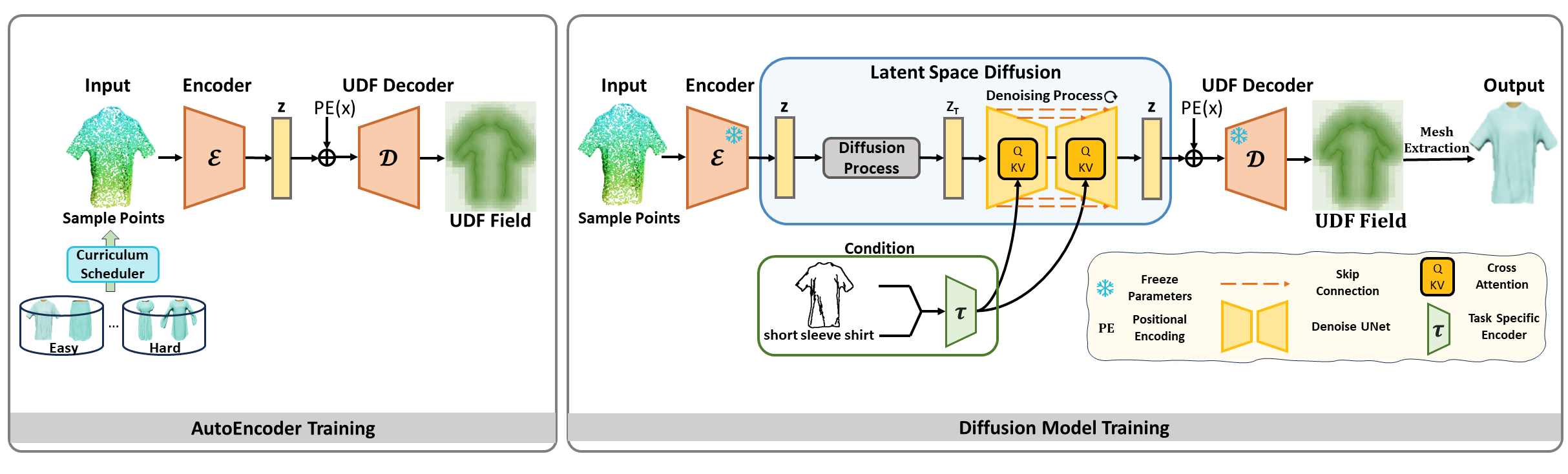

Framework

In our framework, we first encode surface points into a latent code \( z \) with our encoder \( \mathcal{E} \). A curriculum scheduler trains the model on samples in easy-to-hard order. We then train the diffusion model in this latent space, where various conditions can be injected through a task-specific encoder \( \tau \). Finally, the sampled latent code \( z \) is decoded into a UDF by our UDF decoder \( \mathcal{D} \), from which the mesh is extracted.
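A curriculum scheduler of this kind can be sketched as a function that, at each epoch, exposes only the easiest fraction of the training shapes and grows that fraction over time. In the minimal sketch below, the per-shape difficulty scores (for example, a previous reconstruction error), the linear pacing schedule, and the names `curriculum_subset` and `start_fraction` are hypothetical choices for illustration; the page does not specify how Surf-D measures difficulty or paces its curriculum.

```python
from typing import Dict, List


def curriculum_subset(
    difficulty: Dict[str, float],
    epoch: int,
    total_epochs: int,
    start_fraction: float = 0.3,
) -> List[str]:
    """Return the shape IDs to train on at `epoch`, ordered easy-to-hard.

    Hypothetical linear pacing: the visible fraction of the dataset grows from
    `start_fraction` at epoch 0 to 1.0 at the final epoch.
    """
    if total_epochs < 1:
        raise ValueError("total_epochs must be >= 1")
    # Sort shapes from easiest (lowest difficulty score) to hardest.
    ordered = sorted(difficulty, key=lambda sid: difficulty[sid])
    progress = min(epoch / max(total_epochs - 1, 1), 1.0)
    fraction = start_fraction + (1.0 - start_fraction) * progress
    # Always keep at least one shape in the training pool.
    count = max(1, round(fraction * len(ordered)))
    return ordered[:count]


# Hypothetical difficulty scores for four training shapes.
scores = {"chair": 0.1, "shirt": 0.9, "table": 0.2, "lamp": 0.5}
for epoch in range(4):
    print(epoch, curriculum_subset(scores, epoch, total_epochs=4))
```

With four shapes and four epochs, the pool grows from the single easiest shape to the full set. An actual scheduler could instead use stepwise or nonlinear pacing, or re-score difficulty as training progresses.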

Unconditional Generation

By sampling latent codes unconditionally from the learned latent space, Surf-D produces high-quality and diverse shapes. To confirm that the model produces novel shapes rather than copies of the training data, we also calculate the average Chamfer Distance (CD) between the generated shapes and each object in the training set.
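The novelty check above relies on the Chamfer Distance between point clouds. Definitions vary across papers (squared or unsquared distances, sum or mean of the two directions); the minimal sketch below assumes the symmetric mean-of-squared-nearest-neighbour-distances variant with brute-force search, and reports both the average CD over the training set and the CD to the closest training shape. The function names and toy data are illustrative assumptions, not taken from the Surf-D code.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer Distance: mean squared NN distance a->b plus b->a."""

    def one_way(src: Sequence[Point], dst: Sequence[Point]) -> float:
        # For every source point, take the squared distance to its nearest target point.
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)


def novelty_stats(
    generated: Sequence[Point], training_set: List[Sequence[Point]]
) -> Tuple[float, float]:
    """Return (average CD over the training set, CD to the nearest training shape)."""
    distances = [chamfer_distance(generated, shape) for shape in training_set]
    return sum(distances) / len(distances), min(distances)


# Toy example: one training shape equals the generated one, another is shifted up by 1.
generated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shifted = [(x, y, z + 1.0) for x, y, z in generated]
print(novelty_stats(generated, [generated, shifted]))  # (1.0, 0.0)
```

A nearest-training-shape CD of zero, as in this toy case, would indicate a memorized sample; a generated shape that is novel should keep that value clearly above zero.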

Category Conditional Generation

Given a category label as the condition, Surf-D generates detailed 3D shapes of different categories with high quality and diversity.

Sketch Conditional Generation

Conditioned on sketch images, Surf-D generates high-quality, detailed shapes of arbitrary topology that align with the input sketches.

Generation for Virtual Try-on

We also explore further applications of Surf-D. As shown in the video, garments generated by Surf-D can be used for virtual try-on with high quality and fidelity. Imagine sketching whatever clothes you want, generating them, and then putting them on your own avatar to try them on. Although it may sound far-fetched, this is achievable with our proposed Surf-D!

Single-view Reconstruction

Given a single-view image of an object, Surf-D produces high-quality reconstructions that faithfully align with the input image.

Text-guided Generation

Given a text description of an object, Surf-D produces high-quality shapes aligned with the input text.

Check out our paper for more details.

Citation

@article{yu2023surf,
  title={Surf-D: High-Quality Surface Generation for Arbitrary Topologies using Diffusion Models},
  author={Yu, Zhengming and Dou, Zhiyang and Long, Xiaoxiao and Lin, Cheng and Li, Zekun and Liu, Yuan and Müller, Norman and Komura, Taku and Habermann, Marc and Theobalt, Christian and others},
  journal={arXiv preprint arXiv:2311.17050},
  year={2023}
}